Client Success Story

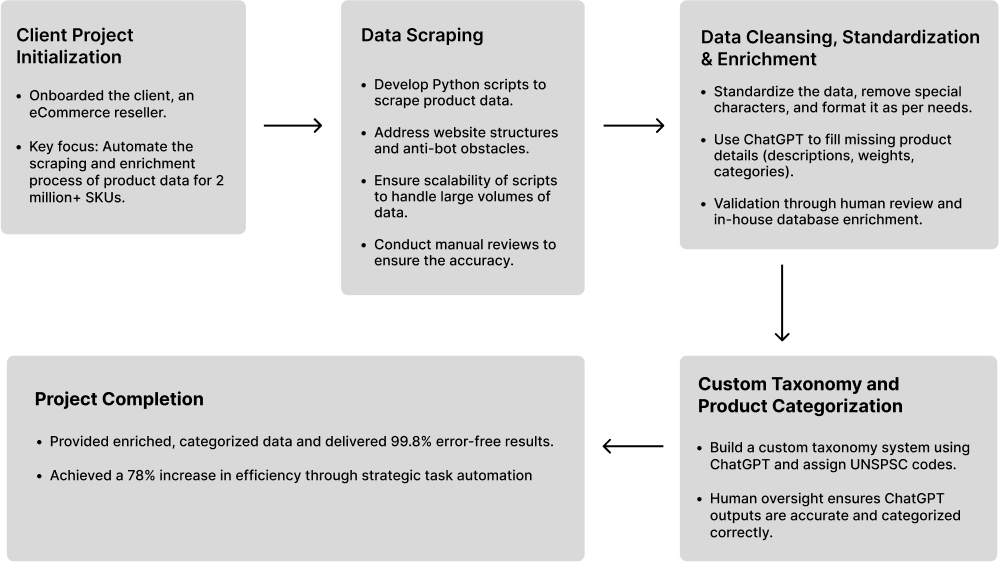

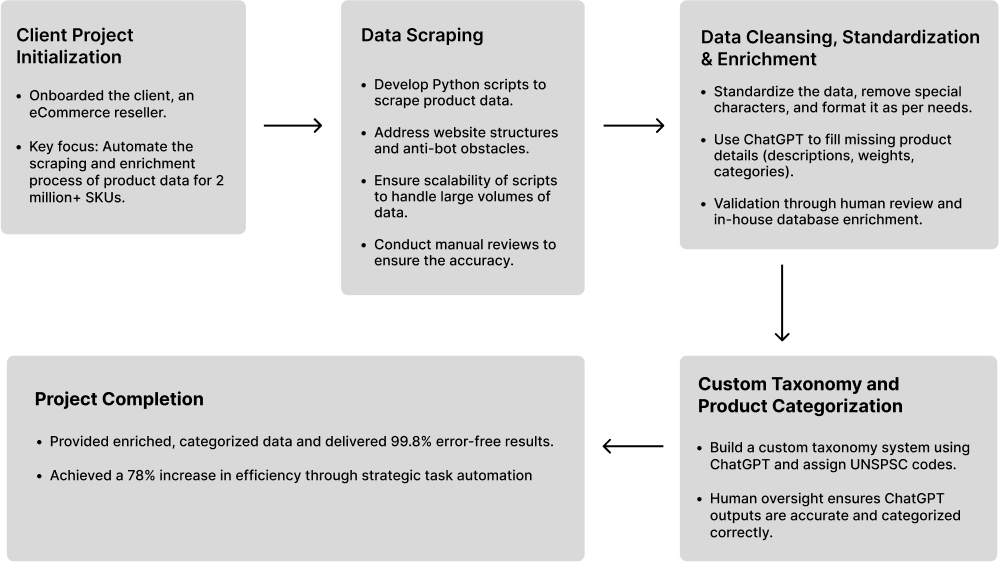

Project Workflow

The Client

Founded in 1981, our client has established itself as a reliable eCommerce reseller with long-standing relationships with top brand manufacturers. They specialize in machinery, equipment, and supplies across sectors such as hardware, plumbing, and electrical goods. Their portfolio of over 7,000 brands is managed through two dedicated eCommerce platforms, giving their customers a broad and diverse product range.

Project Requirements

The client manages over 2 million products across its websites. They needed our help extracting relevant data and making sure it was clean and enriched. They asked for support in three key areas:

We were required to scrape structured and unstructured data from brand websites specified by the client. The extraction had to be precise, with no information lost during scraping. We also had to cleanse the data by removing special characters and standardizing the format, so it could be integrated quickly into predefined templates.

The client's websites had no existing taxonomy or product categorization framework. Our team had to design a custom taxonomy from scratch, tailored to their extensive product range. We also had to categorize every product accurately and assign UNSPSC codes to improve organization, navigation, and user experience across their platforms.

Many records in the scraped product database had missing information or followed inconsistent data standards. To address this, the client wanted us to use AI, specifically ChatGPT, with customized prompts. The prompts would fill in missing data fields and standardize information, so the database was enriched with accurate, relevant content.

Project Challenges

Standardized marketplaces can be scraped with predefined, reusable scripts. This project was different: the client required cross-platform product data scraping across multiple brand websites built on BigCommerce, Shopify, WooCommerce, and others. Each site had its own structure, access restrictions, and anti-bot measures, so each needed a different extraction approach. We had to develop a customized script for every website while still delivering rapid turnaround times.

Manually written scripts work well for small-scale tasks, but they are often inefficient when processing large volumes of data or scraping many websites simultaneously. This posed a significant challenge for our scraping team, which had to extract large volumes of data from multiple brand websites.
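The project's scripts are not published here. One way to scale a single-site scraper to many pages is to run fetches concurrently. The minimal sketch below does this with Python's standard-library `ThreadPoolExecutor`. The `scrape_all` helper and the injected `fetch` callable are hypothetical. In practice, `fetch` would wrap a site-specific script, for example a `requests.get` call with a timeout.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, Union


def scrape_all(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    max_workers: int = 8,
) -> Dict[str, Union[str, Exception]]:
    """Run `fetch` over many URLs concurrently (hypothetical helper).

    Returns a dict mapping each URL to its fetched content, or to the
    exception raised while fetching it, so one bad page does not stop
    the whole batch.
    """
    results: Dict[str, Union[str, Exception]] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit everything up front; the pool runs at most max_workers at a time.
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # keep the error so the URL can be retried
                results[url] = exc
    return results
```

Recording the exception for each URL, instead of letting one failure abort the batch, makes it easy to queue failed pages for a retry or a manual check. Threads suit this I/O-bound work. Keep `max_workers` modest to respect each site's rate limits.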

Data quality presented another substantial challenge. A significant portion of the scraped product data lacked proper categorization, which required extensive post-processing to make the data usable. Our team also removed special characters and reformatted the data to meet the specified standards.
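The sketch below shows a rough version of this post-processing: it normalizes text fields in Python. The client's standards defined which characters count as "special", and those standards are not given here. The allowlist in `_DISALLOWED` and both character tables are therefore assumptions for illustration only.

```python
import re
import unicodedata
from typing import Dict

# Assumed: trademark/registered/copyright symbols are dropped before NFKC,
# which would otherwise turn the trademark sign into the letters "TM".
_DROP = str.maketrans({"\u2122": "", "\u00ae": "", "\u00a9": ""})

# Assumed: typographic characters mapped to plain ASCII after NFKC.
_REPLACE = str.maketrans({
    "\u2018": "'", "\u2019": "'",   # curly single quotes
    "\u201c": '"', "\u201d": '"',   # curly double quotes
    "\u2013": "-", "\u2014": "-",   # en and em dashes
    "\u2044": "/",                  # fraction slash (NFKC turns 1/2 glyph into 1, U+2044, 2)
})

# Assumed allowlist: word characters, whitespace, and punctuation that is
# meaningful in product data (e.g. 1/2" pipe, 3.5 mm, 10-pack, 50%).
_DISALLOWED = re.compile(r"[^\w\s.,/()&%+#:'\"-]")


def clean_text(value: str) -> str:
    """Normalize characters, strip special characters, collapse whitespace."""
    value = value.translate(_DROP)
    value = unicodedata.normalize("NFKC", value)  # e.g. non-breaking space -> space
    value = value.translate(_REPLACE)
    value = _DISALLOWED.sub("", value)
    return re.sub(r"\s+", " ", value).strip()


def clean_record(record: Dict[str, object]) -> Dict[str, object]:
    """Apply clean_text to every string field; leave other values unchanged."""
    return {k: clean_text(v) if isinstance(v, str) else v for k, v in record.items()}
```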

Using ChatGPT for product categorization, cleansing, and enrichment posed its own challenges. For instance, the model occasionally suggested outdated UNSPSC codes or ambiguous categories. To mitigate this, we had to craft highly specific prompts and closely supervise the AI outputs. This kept the data accurate, relevant, and aligned with the client's taxonomy.
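Some of that supervision can be automated before a person reviews a suggestion. The sketch below assumes the standard 8-digit UNSPSC structure: segment, family, class, and commodity, two digits each, with higher-level codes padded with zeros. It also assumes a set `current_codes` loaded from whichever UNSPSC release the client standardized on. The function names and status labels are hypothetical.

```python
import re
from typing import AbstractSet, Dict

# UNSPSC codes are 8 digits: segment, family, class, commodity (2 digits each).
_UNSPSC = re.compile(r"[0-9]{8}")


def split_unspsc(code: str) -> Dict[str, str]:
    """Split an 8-digit UNSPSC code into its four hierarchy levels."""
    if not _UNSPSC.fullmatch(code):
        raise ValueError(f"not an 8-digit UNSPSC code: {code!r}")
    return {"segment": code[0:2], "family": code[2:4],
            "class": code[4:6], "commodity": code[6:8]}


def check_suggested_code(code: str, current_codes: AbstractSet[str]) -> str:
    """Classify a model-suggested code before it is accepted (hypothetical).

    Returns "malformed", "not_commodity_level" (ends in 00, i.e. a class or
    higher-level code), "not_in_current_release", or "ok".
    `current_codes` is assumed to hold the commodity codes of the UNSPSC
    release the client standardized on.
    """
    code = code.strip()
    if not _UNSPSC.fullmatch(code):
        return "malformed"
    if split_unspsc(code)["commodity"] == "00":
        return "not_commodity_level"
    if code not in current_codes:
        return "not_in_current_release"
    return "ok"
```

Any status other than `"ok"` would go to a reviewer instead of being accepted automatically. A code ending in `00` is flagged because it names a group of products rather than a specific commodity, which is one form of the ambiguity described above.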

Our Solution

To tackle the project requirements effectively, we assigned a dedicated team of six professionals, including data scraping experts, a prompt engineer, and a QA specialist.

Custom Script Creation for Product Data Scraping

The websites to be scraped were provided in batches. Our data scraping team developed custom Python scripts and used extraction tools to capture complex and dynamic content from multiple brand websites. These scripts automated the collection of thousands of product entries with high precision. The extracted data included key attributes such as product categories, descriptions, pricing, taxonomy, and reviews. Our team then categorized and organized this data and removed special characters, all within the client's specified time frame.

To address scraping challenges, we employed a variety of techniques:

cURL requests were used to query the target websites' resources directly, enabling quick testing and data retrieval without going through the browser interface.

The Python Requests library was used to automate HTTP requests to specified URLs, ensuring consistent and reliable data downloads.

BeautifulSoup objects were used to parse HTML content, so specific data points could be extracted and cleaned efficiently for further processing.
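The parsing step can be sketched without third-party packages so that it runs anywhere. The example below uses Python's standard-library `html.parser.HTMLParser` as a stand-in for BeautifulSoup. With BeautifulSoup, the equivalent would be one `soup.select_one(...)` lookup per field. The CSS class names passed in `field_classes` are hypothetical, since each brand website needed its own mapping.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

# Elements that never have a closing tag, so they must not be pushed on the stack.
_VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
         "link", "meta", "source", "track", "wbr"}


class ProductPageParser(HTMLParser):
    """Collect the text of elements whose CSS class maps to an output field."""

    def __init__(self, field_classes: Dict[str, str]) -> None:
        super().__init__()
        self._class_to_field = {cls: name for name, cls in field_classes.items()}
        self._stack: List[Tuple[str, Optional[str]]] = []  # (tag, field or None)
        self.fields: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag in _VOID:
            return
        classes = (dict(attrs).get("class") or "").split()
        field = next((self._class_to_field[c] for c in classes
                      if c in self._class_to_field), None)
        self._stack.append((tag, field))

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags such as <br/> carry no text

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag; this tolerates
        # unclosed elements such as <p> or <li>.
        for i in range(len(self._stack) - 1, -1, -1):
            if self._stack[i][0] == tag:
                del self._stack[i:]
                break

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        # Attribute text to the innermost open element that maps to a field.
        for _, field in reversed(self._stack):
            if field is not None:
                prev = self.fields.get(field)
                self.fields[field] = f"{prev} {text}" if prev else text
                break


def parse_product(html: str, field_classes: Dict[str, str]) -> Dict[str, str]:
    """Parse one product page into {field: text} using a per-site class map."""
    parser = ProductPageParser(field_classes)
    parser.feed(html)
    parser.close()
    return parser.fields
```

BeautifulSoup handles badly formed markup far better than this hand-rolled stack, which is one reason to prefer it for production scraping.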

To minimize the risk of being blocked and to stay within legal and ethical standards, the client provided clear guidelines on which data could be scraped and which should be excluded.
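The guidelines themselves are not reproduced here. Suppose they can be expressed as a list of permitted fields plus URL paths to avoid. In that case, a small filter applied before any record is stored keeps every site-specific script consistent with them. `ScrapeGuidelines` and the example paths are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Tuple
from urllib.parse import urlparse


@dataclass(frozen=True)
class ScrapeGuidelines:
    """Hypothetical encoding of client-provided scraping rules."""

    allowed_fields: FrozenSet[str]
    excluded_path_prefixes: Tuple[str, ...] = ()

    def url_allowed(self, url: str) -> bool:
        """False if the URL path falls under an excluded prefix."""
        path = urlparse(url).path or "/"
        return not any(path.startswith(p) for p in self.excluded_path_prefixes)

    def filter_record(self, record: Dict[str, object]) -> Dict[str, object]:
        """Drop every field the guidelines do not explicitly permit."""
        return {k: v for k, v in record.items() if k in self.allowed_fields}
```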

Taxonomy Development and UNSPSC Categorization

To meet the client’s need for a structured product categorization system, we designed a custom taxonomy based on Google’s product taxonomy, tailored to the client’s specific product line. We then assigned UNSPSC codes to each product using ChatGPT and the UNSPSC website. ChatGPT identified the closest category matches, and our team meticulously verified every code and category to eliminate errors and discrepancies in the classification.
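Google's product taxonomy is published as category paths separated by ` > `, in the form `Parent > Child > Leaf`. The minimal sketch below assumes the custom taxonomy was kept in the same path format. It loads the paths into a tree, so that any category assigned to a product, by ChatGPT or by a reviewer, can be checked against it. The function names and sample categories are illustrative.

```python
from typing import Dict, Iterable, List

Tree = Dict[str, "Tree"]  # each category maps to its child categories


def build_taxonomy(paths: Iterable[str], sep: str = " > ") -> Tree:
    """Build a nested dict from 'Parent > Child > Leaf' category paths."""
    root: Tree = {}
    for line in paths:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank and comment/header lines
        node = root
        for part in line.split(sep):
            node = node.setdefault(part.strip(), {})
    return root


def is_valid_path(tree: Tree, path: str, sep: str = " > ") -> bool:
    """True if every level of `path` exists in the taxonomy."""
    node = tree
    for part in path.split(sep):
        part = part.strip()
        if part not in node:
            return False
        node = node[part]
    return True


def leaf_paths(tree: Tree, prefix: str = "", sep: str = " > ") -> List[str]:
    """Full paths of categories with no children (the assignable leaves)."""
    out: List[str] = []
    for name, children in tree.items():
        full = f"{prefix}{sep}{name}" if prefix else name
        if children:
            out.extend(leaf_paths(children, full, sep))
        else:
            out.append(full)
    return out
```

Restricting assignments to the output of `leaf_paths` is one way to keep products out of overly broad parent categories.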

Data Enrichment Using Custom ChatGPT Development

We purchased API tokens and built a custom solution on GPT-4 to handle partially scraped data and missing product information in the client's database. This automated the filling of gaps such as product descriptions, weights, and categories, ensuring data consistency. Our prompt engineer crafted highly specific prompts to guide the model toward the most accurate and relevant results, so the enriched data met the client’s quality standards.
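The exact prompts are not published. The sketch below shows one way such a step can be structured. It detects which fields are blank, asks only for those fields as JSON, and flags the record for human review when the reply is unusable. The field names, the prompt wording, and the `complete` callable are all hypothetical. `complete` is a placeholder for whatever wrapper calls the model API.

```python
import json
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
REQUIRED_FIELDS = ["description", "weight_lb", "category"]  # hypothetical schema


def _is_blank(value: object) -> bool:
    return value is None or (isinstance(value, str) and not value.strip())


def missing_fields(record: Record, required: List[str] = REQUIRED_FIELDS) -> List[str]:
    """Required fields that are absent, None, or empty strings."""
    return [f for f in required if _is_blank(record.get(f))]


def build_prompt(record: Record, fields: List[str]) -> str:
    """Ask only for the missing fields, as JSON, grounded in known attributes."""
    known = {k: v for k, v in record.items() if not _is_blank(v)}
    return (
        "You are completing product data for an industrial supply catalog.\n"
        f"Known attributes (JSON): {json.dumps(known, sort_keys=True)}\n"
        f"Return only a JSON object with exactly these keys: {', '.join(fields)}.\n"
        "Use null for any value that cannot be determined from the attributes."
    )


def enrich(record: Record, complete: Callable[[str], str]) -> Tuple[Record, bool]:
    """Fill missing fields via `complete`; return (record, needs_review)."""
    fields = missing_fields(record)
    if not fields:
        return dict(record), False
    try:
        reply = json.loads(complete(build_prompt(record, fields)))
    except json.JSONDecodeError:
        return dict(record), True  # unusable reply: send to a reviewer
    if not isinstance(reply, dict):
        return dict(record), True
    updated, needs_review = dict(record), False
    for f in fields:  # keys the model was not asked for are ignored
        value = reply.get(f)
        if _is_blank(value):
            needs_review = True  # the model could not supply this field
        else:
            updated[f] = value
    return updated, needs_review
```

Two rules keep the model from overwriting or inventing attributes: keys that were not requested are ignored, and a `null` answer is never treated as data.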

We also drew on our in-house master database for enrichment, supplementing records with additional relevant information and improving their overall quality.
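A gap-filling merge against a master database can be as simple as the sketch below. It assumes master rows are keyed by a normalized manufacturer part number (`mpn`, stripped and upper-cased). That key choice and the function name are hypothetical. Existing values are never overwritten, and the function returns the list of filled fields so reviewers can audit each change.

```python
from typing import Dict, List, Mapping, Tuple

Record = Dict[str, object]


def _is_blank(value: object) -> bool:
    return value is None or (isinstance(value, str) and not value.strip())


def fill_from_master(
    record: Record,
    master: Mapping[str, Record],
    key_field: str = "mpn",
) -> Tuple[Record, List[str]]:
    """Fill blank fields in `record` from the master row with the same key.

    Master rows are assumed to be keyed by the stripped, upper-cased key
    value. Non-blank fields in `record` are never overwritten.
    """
    merged = dict(record)
    filled: List[str] = []
    key = record.get(key_field)
    if _is_blank(key):
        return merged, filled  # nothing to match on
    master_row = master.get(str(key).strip().upper())
    if master_row is None:
        return merged, filled
    for field, value in master_row.items():
        if _is_blank(merged.get(field)) and not _is_blank(value):
            merged[field] = value
            filled.append(field)
    return merged, filled
```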

Optimizing Efficiency Through Automation with Human Oversight

Our approach combines automated processes with strategic human oversight to maximize efficiency and ensure accuracy. At the client's request, we automated multiple workflows, including data scraping, enrichment, categorization, and cleansing. Automation significantly accelerates these processes, but human expertise remains essential for validation and refinement. The combination provides the most reliable results.

| TASK | AUTOMATION | HUMAN INTERVENTION |
|---|---|---|
| Data Scraping | Automated using Python scripts or scraping tools to extract data. | Manual review to verify accuracy, remove unwanted characters, and ensure the data is formatted to the client's specifications. |
| Data Enrichment | ChatGPT was used to fill in missing information in the dataset. | Team members validated the data and used the in-house master database to ensure all gaps were accurately filled. |
| Product Categorization | ChatGPT provided initial category and UNSPSC code suggestions. | Team members manually mapped codes using the UNSPSC website, as ChatGPT occasionally provided outdated or ambiguous codes. |
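The division of labor in the table can be expressed as a simple triage step. In the hypothetical sketch below, each automated check returns an issue description or `None`. Any record with an issue is routed to a human. A small, deterministic sample of clean records is also sent for spot checks. The checks, the `sku` ID field, and the 5% default rate are assumptions, not figures from the project.

```python
import hashlib
from typing import Callable, Dict, Iterable, List, Optional, Tuple

Record = Dict[str, object]
Check = Callable[[Record], Optional[str]]  # returns an issue description or None


def _sampled(record_id: str, rate: float) -> bool:
    """Deterministically pick roughly `rate` of IDs for spot checks."""
    digest = hashlib.sha256(record_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") / 2**32 < rate


def triage(
    records: Iterable[Record],
    checks: List[Check],
    spot_check_rate: float = 0.05,
    id_field: str = "sku",
) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Split records into an auto-accepted list and a human-review queue."""
    accepted: List[Record] = []
    review: List[Tuple[Record, List[str]]] = []
    for rec in records:
        issues = [msg for msg in (check(rec) for check in checks) if msg is not None]
        if not issues and _sampled(str(rec.get(id_field)), spot_check_rate):
            issues = ["random spot check"]
        if issues:
            review.append((rec, issues))
        else:
            accepted.append(rec)
    return accepted, review
```

Hashing the record ID, rather than calling a random generator, means the same records are sampled on every run, so reviewers are not handed a different subset each time.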

Project Outcomes

Successfully developed and implemented a product categorization system for over 2 million items on the client's platform

Delivered 99.8% error-free data through meticulous cleansing and accurate product categorization

Achieved a 78% increase in efficiency through strategic task automation


Contact Us

Reach out to our team for complete support with end-to-end product information management services, from data extraction to cleansing, categorization, and more. To share your business challenges, write to us at